1. Introduction

The world of artificial intelligence assistants is growing so quickly that it no longer surprises us, and it is about to become ubiquitous.

Artificial intelligence refers to programs that can solve problems on their own for specific tasks, such as speech recognition. Most of us probably first met the term through science fiction, so we tend to think of things like Skynet and the Terminator, which offer a rather bleak glimpse into the future of AI.

Most of us already engage with AI algorithms on a daily basis, in search engines, transportation, healthcare, personal finance, aviation, and many more areas.

I believe that artificial intelligence ultimately comes down to emulating our cognition in machines: it is about amplifying intelligence, the most powerful phenomenon in the universe. We are still learning, through reverse engineering, to understand and replicate intelligence.

In real-life scenarios, an AI implementation requires high-quality data, which machines or software use to simulate human intelligence, encompassing planning, learning from past experience, communication, perception, and the ability to move and manipulate objects.

Most of the development in AI is associated with benefits to humanity, everything from face and voice recognition to the latest self-driving cars.

The advances in this domain have been made possible by a scientific field called machine learning (ML), one of the key ways to mimic a human mind through continuous learning and the ability to identify patterns in streams of data.

Machine learning can be divided into two major components: one uses algorithms to find meaning in random and scrambled data, and the other uses learning algorithms to find relationships within that knowledge and improve machine performance on certain tasks. For example, ML is the area of computing that is improving the way we use our smartphones.

Conversational interfaces (CI) have become one of the main digital marketing trends of recent years. In the words of Ev Williams, CEO of Medium, a partner at Obvious Ventures and co-founder of Twitter, “the future operating system for humanity is the conversation.” Conversational interfaces are not just a fun thing, but a great opportunity for brands to be in close communication with their target audience.

In this article, we will learn how to design and create a skill for Alexa, Amazon's voice-controlled personal assistant: the cloud-based service that handles all the natural language understanding, speech recognition, and machine learning for all Alexa-enabled devices.

The Amazon Echo is the first and best-known endpoint of the Alexa ecosystem, built to make life easier and more enjoyable by simplifying everyday actions with voice commands.

The natural language processing for a skill is handled on Amazon's side; the only thing developers have to do is provide the information that tells Alexa what to listen for and how to respond. So, building a skill is easier than we might initially expect. :)

2. Creating an Amazon Alexa Skill

It is important to design our skill before we start creating it. Prepare an outline or schema of the possible intents and entities that are relevant to the domain-specific topic of our application.

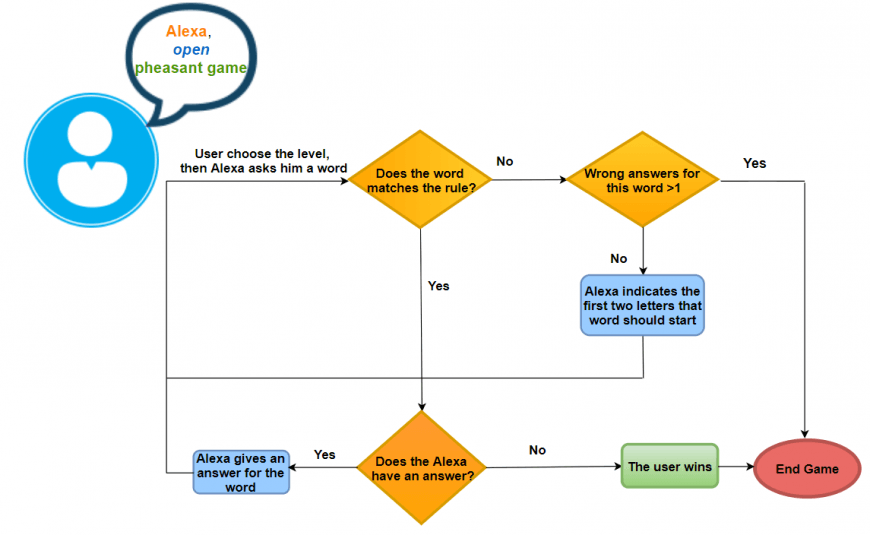

We always like to add a little happiness to every day, so let’s create a simple skill that can play Pheasant, a word game that is popular in Romania: one of the players says a word, and the other player has to respond with another word that starts with the last two letters of the given word. When a player cannot find a word, that player is declared defeated.
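The rule of the game is small enough to capture in a few lines. The following is a minimal sketch, not code from the skill itself: the PheasantRules class and its method names are hypothetical, and it assumes that comparisons should ignore letter case.

```csharp
using System;

// Hypothetical helper for illustration; not part of the original skill.
public static class PheasantRules
{
    // Returns the last two letters of a word in lower case,
    // or null when the word is too short to continue the chain.
    public static string LastTwoLetters(string word)
    {
        if (string.IsNullOrWhiteSpace(word)) return null;
        var trimmed = word.Trim().ToLowerInvariant();
        return trimmed.Length < 2 ? null : trimmed.Substring(trimmed.Length - 2);
    }

    // True when 'reply' starts with the last two letters of 'previous'.
    public static bool IsValidReply(string previous, string reply)
    {
        var ending = LastTwoLetters(previous);
        if (ending == null || string.IsNullOrWhiteSpace(reply)) return false;
        return reply.Trim().StartsWith(ending, StringComparison.OrdinalIgnoreCase);
    }
}
```

With this sketch, "lemon" is accepted as a reply to "smile" (it starts with "le"), while "apple" is rejected.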

As we can see, we need to build a dictionary of words at different levels of complexity.

For this, we can use a DynamoDB database filled with words from the Oxford Dictionaries API. The vocabulary service supports content negotiation to provide the most appropriate representation for word lookups, with multiple filters and parameters.
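Before wiring up DynamoDB, the lookup that the skill needs can be prototyped in memory. The sketch below is only an illustration under stated assumptions: the Level enum, the InMemoryWordStore class, and the FindWord method are hypothetical names, and a plain dictionary stands in for the real table.

```csharp
using System;
using System.Collections.Generic;
using System.Linq;

// Hypothetical difficulty levels; the article only says "different levels of complexity".
public enum Level { Easy, Medium, Hard }

// Hypothetical in-memory stand-in for the DynamoDB table of words.
public class InMemoryWordStore
{
    private readonly Dictionary<Level, List<string>> _wordsByLevel;

    public InMemoryWordStore(Dictionary<Level, List<string>> wordsByLevel)
    {
        _wordsByLevel = wordsByLevel;
    }

    // Returns the first word at the given level that starts with 'prefix',
    // or null when no such word is known.
    public string FindWord(Level level, string prefix)
    {
        if (string.IsNullOrEmpty(prefix)) return null;

        List<string> words;
        if (!_wordsByLevel.TryGetValue(level, out words)) return null;

        return words.FirstOrDefault(
            w => w.StartsWith(prefix, StringComparison.OrdinalIgnoreCase));
    }
}
```

A null result corresponds to the case in which Alexa does not know a word and the user wins the level.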

All skills, like web or mobile applications, contain two parts: the Interaction Model (the frontend) and the Hosted Service (the backend).

- Interaction Model - the voice user interface that defines what functionalities or behaviors the skill is able to handle.

- Hosted Service - the programming logic, hosted on the internet, that responds to a user's requests.

How to create a Lambda function using Serverless Framework

The Serverless Framework is truly a blessing for programmers because we no longer have to worry about managing servers. We can just focus on building the application.

It is important to know that we can use this framework on all operating systems and create Lambda functions in different programming languages, such as C#, Java, Node.js, Python, and Go, with more languages coming in the future.

To install the framework, first make sure that Node.js is available on your system, then run npm install -g serverless in a terminal window. After that, you can create a C# template application with the CLI:

    sls create --template aws-csharp --path myService

In the path that you specified you find a project with the following structure:

In the serverless.yml file, you will need to describe the setup and the resources you need for the Lambda function, the framework version, stage, and region.

    service: PheasantGame

    provider:
      name: aws
      runtime: dotnetcore1.0
      stage: dev
      region: eu-west-1
      profile: maria_developer

    package:
      artifact: bin/release/netcoreapp1.0/deploy-package.zip

    functions:
      pheasantSkill:
        handler: CsharpHandlers::AwsDotnetCsharp.Handler::Pheasant
        events:
          - alexaSkill

When you run the sls deploy command, Serverless creates an AWS CloudFormation stack and deploys your function to the AWS Lambda service.

3. Building the Lambda function

AWS Lambda is a compute service that runs your code in response to events, such as table updates in Amazon DynamoDB, HTTP requests via Amazon API Gateway, modifications to objects in Amazon S3 buckets, or sensor readings from a connected IoT device. AWS takes care of capacity provisioning, automatic scaling, security, and code logging and monitoring.

Going forward, we will write a Lambda function in C# that responds to the user's voice with a personalized welcome message and other custom messages.

For this, we start from the Lambda project template that comes with the AWS Toolkit for the integrated development environment we are working in.

To manage user interactions with Alexa, we used Alexa.NET, a simple .NET Core library for handling Alexa skill requests and responses.

This library has a SkillResponse class that we extended to suit our purpose. We did so with the Wrapper pattern, which is quite an elegant way to extend class functionality and also aligns nicely with the Open/Closed principle of SOLID.

    using Alexa.NET.Response;

    // Wraps SkillResponse and applies the defaults used by every reply.
    public class SkillResponseWrapper
    {
        public SkillResponse SkillResponse { get; set; }

        public SkillResponseWrapper(SkillResponse skillResponse, ResponseBody responseBody, IOutputSpeech outputSpeech)
        {
            SkillResponse = skillResponse;
            SkillResponse.Response = responseBody;
            SkillResponse.Response.OutputSpeech = outputSpeech;
            // Keep the session open so the game can continue.
            SkillResponse.Response.ShouldEndSession = false;
            SkillResponse.Version = "1.0";
        }
    }

In the handler method, we create a Response object that corresponds to each use case.

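    using Alexa.NET.Request;
    using Alexa.NET.Response;
    using Amazon.Lambda.Core;

    public class Function
    {
        // Container and ApplicationRegistry come from the project's IoC setup.
        protected readonly Container _container;

        public SkillResponse SkillResponse { get; set; }

        public Function()
        {
            _container = new Container(new ApplicationRegistry());
            SkillResponse = _container.GetInstance<SkillResponseWrapper>().SkillResponse;
        }

        // Entry point invoked by AWS Lambda for every Alexa request.
        public SkillResponse FunctionHandler(SkillRequest skillRequest, ILambdaContext context)
        {
            return GetResponse(skillRequest, context);
        }

        // GetResponse(...) follows below.
    }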

The handler has to be able to handle various types of requests:

- LaunchRequest - the first message, sent when the user invokes the skill.

- IntentRequest - the function gets a request for one of the intents defined in your intent schema.

- SessionEndedRequest - the user wants to end the session.

    public SkillResponse GetResponse(SkillRequest skillRequest, ILambdaContext context)
    {
        if (skillRequest.GetRequestType() == typeof(LaunchRequest))
        {
            // Greet the user with a randomly chosen welcome message.
            SkillResponse = ResponseBuilder.Ask(
                MessageResponse(welcomeMessages.ElementAt(new Random().Next(0, welcomeMessages.Count))),
                new Reprompt());
        }
        else if (skillRequest.GetRequestType() == typeof(IntentRequest))
        {
            var intentRequest = skillRequest.Request as IntentRequest;

            switch (intentRequest.Intent.Name)
            {
                case "PlayIntent":
                    SkillResponse = PlayIntentResponse(intentRequest, context);
                    break;

                case "AMAZON.YesIntent":
                    SkillResponse = ResponseBuilder.Ask(MessageResponse(Resource.PlayAnotherGameConfirmMessage), new Reprompt());
                    break;

                case "AMAZON.NoIntent":
                    // The user does not know a word: either the level is lost or a hint is given.
                    SkillResponse = levelLife.Equals(2)
                        ? ResponseBuilder.Ask(MessageWhenUserLose(), new Reprompt())
                        : ResponseBuilder.Ask(MessageResponse(string.Format(Resource.HintWithTheLastTwoLettersMessage, lastTwoLetters)), new Reprompt());
                    break;

                // The name must match the built-in intent declared in the interaction model.
                case "AMAZON.HelpIntent":
                    SkillResponse = ResponseBuilder.Ask(MessageResponse(Resource.HelpMessage), new Reprompt());
                    break;

                case "AMAZON.StopIntent":
                    SkillResponse = ResponseBuilder.Ask(MessageResponse(Resource.EndSessionMessage), new Reprompt());
                    SkillResponse.Response.ShouldEndSession = true;
                    break;

                default:
                    SkillResponse = ResponseBuilder.Ask(MessageResponse(Resource.MisunderstoodMessage), new Reprompt());
                    break;
            }
        }

        return SkillResponse;
    }

In every game, the main goal is to win. What if, when the user wins, Alexa rewarded them with a cheerful, funny sound to make the experience more enjoyable?

In this case, we can use Speech Synthesis Markup Language (SSML) to gain control over Alexa's responses. With SSML tags in the speech, we can change the rate and volume, insert pauses, or embed our own audio files.

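    private SsmlOutputSpeech MessageWhenUserWins()
    {
        return new SsmlOutputSpeech
        {
            // SSML must be wrapped in a <speak> root element; an <audio src="..."/>
            // tag that points to your own MP3 file can be placed inside it.
            Ssml = "<speak>I don't know a word in this case. You win this level.</speak>"
        };
    }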

Keep in mind that, at the time of writing, the sound must be a valid MP3 file with a sample rate of 16000 Hz, and it can't be longer than ninety seconds.
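To show how spoken text and a sound can be combined in one response, here is a minimal sketch that builds the SSML string with System.Xml.Linq, so that special characters in the text are escaped automatically. The SsmlSketch class, the SpeechWithSound method, and the example.com URL used below are assumptions made for illustration; they are not part of the skill or of Alexa.NET.

```csharp
using System.Xml.Linq;

// Hypothetical helper for illustration; not part of Alexa.NET.
public static class SsmlSketch
{
    // Builds <speak>text<audio src="audioUrl" /></speak> as a single-line string.
    public static string SpeechWithSound(string text, string audioUrl)
    {
        var speak = new XElement("speak",
            text,                                                    // escaped by XElement
            new XElement("audio", new XAttribute("src", audioUrl))); // sound played after the text

        return speak.ToString(SaveOptions.DisableFormatting);
    }
}
```

The returned string could then be assigned to the Ssml property of an SsmlOutputSpeech, for example with SsmlSketch.SpeechWithSound("You win this level.", "https://example.com/win.mp3"), where the URL is a placeholder for your own hosted file.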

Once we have built the Lambda function we are ready for the deployment procedure via CloudFormation.

As the Serverless documentation describes, feel free to customize the sls deploy command by providing the stage, the region, or the profile name for which you want to upload or update the function (for example, sls deploy --stage dev --region eu-west-1 --aws-profile maria_developer).

One more thing before we deploy to the cloud.

In order to deploy the function to the AWS Lambda service, you have to create an account at https://console.aws.amazon.com/ and generate a profile with the permissions that the deployment needs.

To connect the frontend to a Lambda function (the backend) we must provide its Amazon Resource Name (ARN), which is a unique identifier that represents the address of our Lambda function.

Afterward, we need to create a custom interaction model for the skill that binds the user interaction to the application. We will do that in the developer console at https://developer.amazon.com/. After choosing a name and an invocation name, we build the intent schema.

It is considered best practice to use only as many intents as you need to perform the functions of your application. In this skill, we intend to get from the user the level and the words during the wordplay.

    {
      "interactionModel": {
        "languageModel": {
          "invocationName": "game",
          "intents": [
            {
              "name": "PlayIntent",
              "slots": [
                {
                  "name": "Word",
                  "type": "AMAZON.Language"
                }
              ],
              "samples": [
                "{Word}",
                "I say {Word}"
              ]
            },
            {
              "name": "AMAZON.HelpIntent",
              "samples": [
                "give me a hint",
                "what should I say"
              ]
            },
            {
              "name": "AMAZON.YesIntent",
              "samples": [
                "I want to play one more time",
                "I want to play again"
              ]
            },
            {
              "name": "AMAZON.NoIntent",
              "samples": [
                "I don't know",
                "I am not sure",
                "I don't know a word"
              ]
            },
            {
              "name": "AMAZON.StopIntent",
              "samples": [
                "stop",
                "exit"
              ]
            }
          ]
        }
      }
    }

An IntentRequest is sent whenever the user says something that maps to one of these intents; in our function, it is handled by the switch statement in GetResponse.

To improve the skill's natural language understanding and to design an open-ended experience that handles the user’s expectations elegantly, Amazon provides a machine learning cloud service for the model training process. To complete this step, in the Utterances section we add some examples of the questions or commands that we expect users to say for each intent.

Skill testing shows how well the skill has learned to understand its users. The important part is to use manual testing to prove out the user experience, the code, and the interaction model.

If you want to test your skill and you don’t have an Amazon device, EchoSim is a convenient alternative.

Unit testing, as part of test-driven development, is the next central aspect of testing and automation: it is the level of the testing process at which individual pieces of functionality are tested.

There are frameworks that help us create accurate unit tests.

Although it may sometimes seem difficult, the benefits it brings are considerable for the quality of the application, the maintainability of the code, and, last but not least, the developer's progress.

In .NET, one of the most popular testing frameworks is xUnit, an open-source testing platform with a strong focus on simplicity and usability.

Let’s create some simple unit tests for our skill.

We will use the IntentRequest and Response example files.

We aim to make sure that the skill request object has been created correctly, so that we get a response that matches the user's intent.

    [Fact]
    public void IntentRequest_should_be_read()
    {
        var request = GetObjectFromExample<SkillRequest>("PlayIntentRequest.json");

        Assert.NotNull(request);
        Assert.Equal(typeof(IntentRequest), request.GetRequestType());
    }

    [Fact]
    public void SlotValue_should_be_read()
    {
        var convertedObj = GetObjectFromExample<SkillRequest>("PlayIntentRequest.json");

        var request = Assert.IsAssignableFrom<IntentRequest>(convertedObj.Request);

        // The key is case-sensitive and must match the slot name used in the example file
        // (the interaction model above declares the slot as "Word").
        var slot = request.Intent.Slots["word"];

        Assert.Equal("smile", slot.Value);
    }

    [Fact]
    public void Should_create_same_json_response_as_example()
    {
        var skillResponse = new SkillResponse
        {
            Version = "1.0",
            SessionAttributes = new Dictionary<string, object>
            {
                {
                    "SessionVariables", new
                    {
                        GameStatus = "start",
                        Level = "easy"
                    }
                }
            },
            Response = new ResponseBody
            {
                OutputSpeech = new PlainTextOutputSpeech
                {
                    Text = "Let's start! Please say a word."
                },
                ShouldEndSession = false
            }
        };

        // Serialize with camelCase property names, as in the example file.
        var responseJson = JsonConvert.SerializeObject(skillResponse, Formatting.Indented, new JsonSerializerSettings
        {
            ContractResolver = new CamelCasePropertyNamesContractResolver()
        });

        const string example = "Response.json";
        var expectedJson = File.ReadAllText(Path.Combine(FilePath, example));

        Assert.Equal(expectedJson, responseJson);
    }

4. Conclusion

A program built to simulate intelligent conversation can, with the help of artificial intelligence and a voice interface, come to feel like a natural conversation between friends.

Voice assistants are quickly evolving to provide more capabilities and value to users. As advances are made in the fields of natural language processing and speech recognition, the agents’ ability to understand and carry out multi-step requests will integrate them further into daily activity workflows.

Once you start interacting with Alexa, it becomes so comfortable that you start wanting more from it, and you realize that a voice interface is not something foreign or hard to adopt. It can also be a pathway to grow your business: a more efficient, time-saving channel in fields like agency marketing (as a content provider) or selling products as a brand. It's time to experiment with it!

We hope our explanation of how to create a custom Alexa skill using AWS Lambda and the Serverless Framework was clear enough. Serverless architecture brings a significant number of benefits, including reduced operational and development costs, easier operational management, and a reduced environmental impact.

If you want to find out more about serverless computing, you are kindly invited to read our article "Pros and Cons of Serverless Computing. FaaS comparison: AWS Lambda vs Azure Functions vs Google Functions".